In an industrial park west of Stockholm, Sweden, is situated one of the world’s most efficient computer data centres. DigiPlex’s Upplands Väsby facility has delivered a power usage effectiveness (PUE) of just 1.06, a notable performance. PUE measures how efficiently a data centre uses energy. It is the ratio of the total energy used by the facility to the energy delivered to the computing equipment, so an ideal facility would score 1.0. The average data centre PUE is 1.7.

The performance of the Stockholm facility is all the more impressive given that 0.03 of the PUE is attributable to losses in the uninterruptible power supply (UPS) system, which leaves just 0.03 for cooling. ‘The data centre is using just 3% of the power consumed by the servers in keeping them cool,’ says Geoff Fox, DigiPlex’s chief innovation and engineering officer.
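The 3% figure follows directly from the PUE definition. The sketch below shows the arithmetic. It assumes, as the quote implies, that all non-IT energy other than the UPS losses goes to cooling. The function name `cooling_overhead` is illustrative.

```python
def cooling_overhead(pue: float, ups_losses: float) -> float:
    """Return cooling energy as a fraction of IT (server) energy.

    Simplifying assumption: every non-IT load except UPS losses is cooling.
    """
    # PUE = total facility energy / IT energy, so (PUE - 1) is the total overhead
    return (pue - 1.0) - ups_losses


share = cooling_overhead(pue=1.06, ups_losses=0.03)
print(f"Cooling energy: {share:.0%} of server energy")  # prints "Cooling energy: 3% of server energy"
```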


The new complex is part of a growing trend to locate data centres in the Nordic region, to take advantage of its climate and readily available, clean, hydro-electric power. Like many other data centres located here, the 6,000m² Upplands Väsby facility is cooled by more than 60 indirect evaporative cooling (IEC) units. These highly efficient units deliver ‘free’ cooling using process fans alone for most of the year. However, unlike other data centres in the region, the performance of the IEC units at DigiPlex’s site has been optimised using an innovative control algorithm developed by the company.

Called Concert Control, the algorithm enables normally separate control systems to work in concert with each other, to reduce the combined infrastructure energy load. This contrasts with more conventional plant-control systems, in which mechanical and electrical plant often operate autonomously and so waste energy.

The company calculates that its solution will deliver a further 10% in energy savings, on top of the savings achieved by cooling the data centre with indirect evaporative coolers. This additional saving could be worth as much as £50,000 per year.

Fox and his team developed the algorithm from first principles, using established building management system (BMS) controls techniques. To understand how it works, it is first helpful to have a basic understanding of the Upplands Väsby data centre’s cooling system.

Inside the facility a series of halls house hundreds of heat-generating, data-processing units called servers. These are mounted in vertical racks, set out in lines to form a series of aisles. A ‘chimney’ is attached to the rear of each rack, to duct the hot air generated by the servers to a sealed, structural false ceiling. The use of chimney racks, and the sealed-ceiling configuration to remove hot air, allows the data hall to become a reservoir of cool air.

The IEC units supply cooled air to the data hall at a high level and at a very low velocity. Fans integral to each server then pull air from this chilled reservoir through the servers. The air – now warmed by up to 12K – is discharged from the rear of the server into the exhaust chimney, and then to the IEC units via the ceiling plenum.

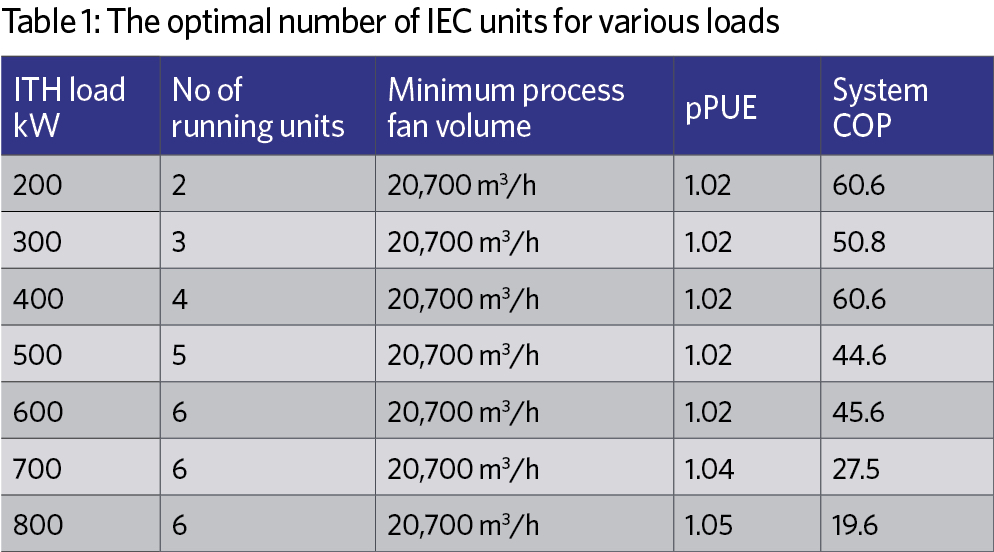

The table indicates how many IEC units run at different load scenarios. It also illustrates the impact of the limited fan turn-down at both the 300kW and 500kW loads. The system coefficient of performance (COP) is the ratio of kilowatts of cooling out to kilowatts of power in. In both instances, the system COP is lower than it is when the load is 100kW higher. A lower minimum fan speed would have enabled an additional IEC unit to run, increasing the cooling efficiency. DigiPlex is currently working with Munters to achieve lower minimum speeds for the IEC fans, to further optimise the system.

Although the IEC units are a highly efficient way to deliver cooling, the system has no mechanism to interface cooling supply with cooling demand directly. Nor can it link the dynamic inputs – such as outdoor and indoor air conditions, and IEC unit process fan speed – to the cooling performance of the unit. The control algorithm was developed to resolve these shortfalls and to optimise the system’s performance.

The beauty of Concert Control is its simplicity. The algorithm references the server electrical load directly. It then uses the specific volume and specific heat capacity of the air, together with the temperature rise (K) across the servers, to calculate the mass flow rate of air that needs to be delivered by the IEC units.
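The underlying energy balance is Q = ṁ·cp·ΔT. Rearranging gives the mass flow, and multiplying by the specific volume gives the supply air volume. The sketch below is a minimal illustration only. The specific heat (1.005 kJ/kg·K) and specific volume (0.84 m³/kg) are assumed typical values for supply air, not DigiPlex’s figures, and `required_airflow` is a hypothetical helper. The 160kW load and 12K rise are borrowed from elsewhere in this article for illustration.

```python
CP_AIR = 1.005  # kJ/(kg·K): assumed typical specific heat capacity of air
V_AIR = 0.84    # m³/kg: assumed typical specific volume of supply air


def required_airflow(server_load_kw: float, delta_t_k: float,
                     cp: float = CP_AIR, v: float = V_AIR) -> tuple[float, float]:
    """Return (mass flow in kg/s, volume flow in m³/s) needed to absorb the server heat.

    Steady-state energy balance: Q = m_dot * cp * dT, so m_dot = Q / (cp * dT).
    Volume flow follows from the specific volume: V_dot = m_dot * v.
    """
    mass_flow = server_load_kw / (cp * delta_t_k)  # kW / (kJ/(kg·K) * K) = kg/s
    volume_flow = mass_flow * v                     # kg/s * m³/kg = m³/s
    return mass_flow, volume_flow


# Metered server load of 160kW with a 12K rise across the servers
m_dot, v_dot = required_airflow(server_load_kw=160.0, delta_t_k=12.0)
print(f"{m_dot:.2f} kg/s, {v_dot:.2f} m³/s")
```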

The algorithm has a unique way of establishing the server cooling demand. There is no control over the power consumed by customers’ servers but, by metering, the company knows exactly how much power the servers are consuming at a particular point in time – and so how much heat the cooling system has to remove. The higher the processing load on the server, the more power it will consume and the greater its need for cooling.

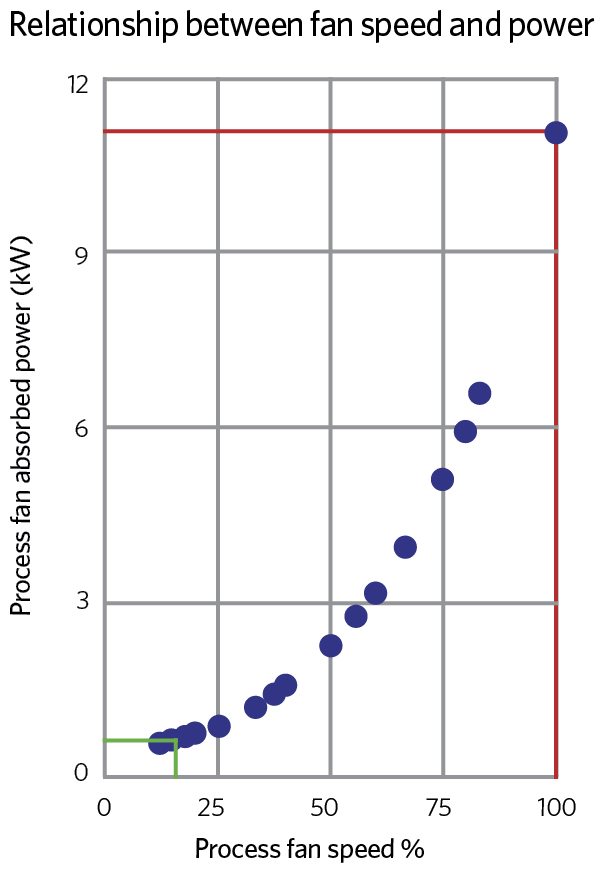

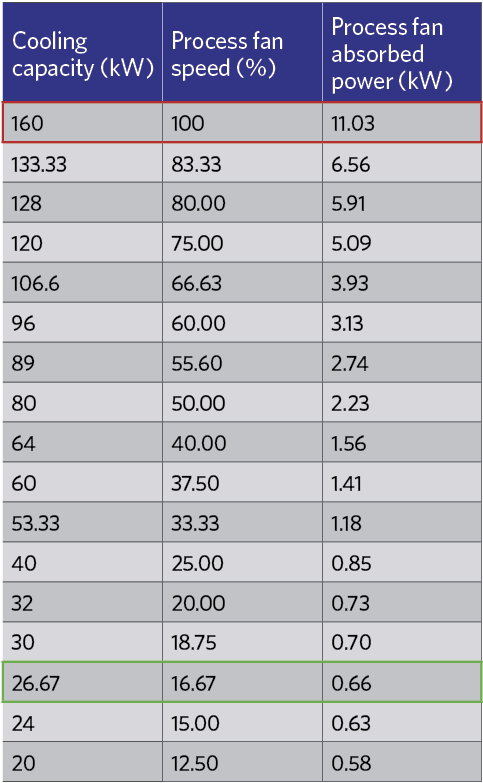

At lower fan speeds the power drawn by the fan motor drops dramatically. The worked example, for a nominal 160kW of IT heat load, shows that if the cooling is provided by one indirect evaporative cooling (IEC) unit with its process fan running at full speed (100%), the power consumed by the fan motor will be 11.03kW. But if cooling is provided by six IEC units with their fans running at 16.6% capacity, the total power consumed by all six fan motors is only 3.96kW, a 64% reduction in fan power consumed.
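The caption’s figures can be checked against the fan affinity laws. The sketch below computes the reduction directly from the two quoted fan powers. It also compares the six-unit total with an idealised cube-law estimate, assuming each of the six units moves one-sixth of the airflow at one-sixth of full speed. Both helper names are hypothetical.

```python
def fan_power_reduction(single_kw: float, multi_total_kw: float) -> float:
    """Fractional reduction in fan power when the airflow is spread across units."""
    return 1.0 - multi_total_kw / single_kw


def ideal_multi_unit_power(single_kw: float, n_units: int) -> float:
    """Idealised total fan power for n units sharing one unit's full airflow.

    Airflow scales with speed, so each unit runs at 1/n of full speed.
    Power scales with the cube of speed, giving single_kw * (1/n)**3 per unit.
    """
    return n_units * single_kw * (1.0 / n_units) ** 3


measured = fan_power_reduction(11.03, 3.96)
ideal_kw = ideal_multi_unit_power(11.03, 6)
print(f"Reduction from caption figures: {measured:.0%}")          # prints "64%"
print(f"Ideal cube-law total for six units: {ideal_kw:.2f} kW")    # prints "0.31 kW"
```

The quoted 3.96kW is well above the idealised 0.31kW. This is presumably because real fan curves, motor losses and drive losses do not follow the pure cube law at low speed. The comparison also suggests why a controller would reference measured fan curves rather than the idealised law alone.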

‘This control technique uses real-time energy consumption of the servers – taken from the power-management system – as a reference point; it then varies the speed of the process fans in the IEC units to match the supply air volume to the cooling load by referencing the fan curve characteristics,’ explains Fox. The advantage of precisely matching the supply air volume to server power consumption is that a very low pressure differential can be maintained between the cold aisle and the rack chimneys. This almost eliminates air leakage between the two, preventing recirculation.
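Referencing the fan curve amounts to looking up the fan speed that delivers the required airflow. The sketch below does this with linear interpolation over a fan curve. The input is the per-unit airflow derived from the metered server load. The `FAN_CURVE` values and the `speed_for_airflow` helper are hypothetical and invented for illustration. They are not Munters data.

```python
from bisect import bisect_left

# Hypothetical fan curve for one IEC unit at its design system resistance:
# (fan speed as a fraction of full speed, delivered airflow in m³/s).
# Invented for illustration; a real controller would use measured curves.
FAN_CURVE = [(0.2, 1.9), (0.4, 3.9), (0.6, 5.8), (0.8, 7.8), (1.0, 9.7)]


def speed_for_airflow(airflow_m3s: float, curve=FAN_CURVE) -> float:
    """Linearly interpolate the fan speed that delivers airflow_m3s.

    Raises ValueError when the demand lies below the lowest curve point
    (the minimum turn-down) or above the full-speed airflow.
    """
    flows = [q for _, q in curve]
    if not flows[0] <= airflow_m3s <= flows[-1]:
        raise ValueError(f"{airflow_m3s} m³/s is outside the fan curve range")
    i = bisect_left(flows, airflow_m3s)  # first curve point with flow >= demand
    if i == 0:  # demand equals the lowest point on the curve
        return curve[0][0]
    (s0, q0), (s1, q1) = curve[i - 1], curve[i]
    return s0 + (s1 - s0) * (airflow_m3s - q0) / (q1 - q0)


# Example: per-unit airflow demand of 4.85 m³/s
print(f"{speed_for_airflow(4.85):.0%}")  # prints "50%"
```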

Knowing the volume of cooled air required to cool the servers, the algorithm then calculates the optimal way to supply this air. DigiPlex co-invested in an environmental chamber and data-hall mock-up at Munters’ works. This enabled prototype units to be tested across a full range of environmental conditions and process loads, to gain an in-depth understanding of the unit’s performance. The algorithm references the fan curves to decide, automatically, the most economic number of IEC units to run and the fan speed at which they should operate for best efficiency. The fan affinity laws state that fan power is proportional to the cube of rotational fan speed. At lower fan speeds, therefore, the power drawn by the motor drops dramatically: halving the speed cuts the power to one-eighth. The detailed modelling gave the algorithm the data it needs to decide when it is more energy efficient to ramp the IEC units’ fans up or down, and when it is more energy efficient to turn individual units on or off.

The algorithm also factors in the limitations of the IEC units, which have a minimum process fan speed. ‘Contrary to most operational standpoints, the modelling demonstrated that, typically, it is more energy efficient to run all of the IEC units together at a low speed than to run fewer units at a higher fan speed,’ Fox says. (See Table 1.)
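The unit-selection trade-off can be sketched with the idealised affinity laws, in which airflow scales with speed and power with its cube. This is a simplification and not DigiPlex’s algorithm, which references measured fan curves. The rated airflow of 9.7 m³/s per unit and the 20% minimum speed are hypothetical values. The 11.03kW full-speed fan power is the figure quoted for the 160kW worked example, and the `best_unit_count` helper is illustrative.

```python
from typing import Optional, Tuple


def best_unit_count(
    total_airflow: float,   # required supply airflow, m³/s
    units_available: int,
    rated_airflow: float,   # airflow of one unit at full speed, m³/s (assumed)
    rated_power_kw: float,  # fan power of one unit at full speed, kW
    min_speed: float,       # minimum fan speed as a fraction of full speed (assumed)
) -> Optional[Tuple[int, float, float]]:
    """Return (units, speed fraction, total fan kW) with the lowest fan power.

    Idealised affinity laws: airflow ∝ speed, power ∝ speed³.
    Returns None if no unit count can deliver the airflow within the speed limits.
    """
    best = None
    for n in range(1, units_available + 1):
        speed = total_airflow / (n * rated_airflow)  # each unit carries an equal share
        if not min_speed <= speed <= 1.0:
            continue  # over capacity, or below the turn-down limit
        power = n * rated_power_kw * speed ** 3
        if best is None or power < best[2]:
            best = (n, speed, power)
    return best


choice = best_unit_count(total_airflow=11.14, units_available=6,
                         rated_airflow=9.7, rated_power_kw=11.03, min_speed=0.2)
print(choice)
```

Under the cube law, total fan power falls as 1/n². The cheapest option is therefore the largest unit count whose per-unit speed stays within the turn-down limit. In this example, six units would each need a speed below the assumed 20% minimum, so the sketch selects five. This mirrors the turn-down effect described for the 300kW and 500kW loads.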

Because the power drawn by the motor drops dramatically at lower fan speeds, Concert Control is efficient under low-load conditions, with no loss of performance when, for example, a data centre first opens.

This control has proved effective in delivering energy-efficiency improvements. The system was tested at the Stockholm data centre in a data hall incorporating six IEC units and an 800kW simulated server load, to stress the plant and systems. The PUE of 1.06 was recorded during these tests, at an external ambient dry-bulb temperature 15K above the yearly average. Power drawn by the IEC units’ scavenger fans is reduced by running the heat exchanger wet whenever the external temperature is above 5°C. Water usage is not an issue because the system uses rainwater harvested from the roof for the evaporative/adiabatic cooling process.

‘The principle behind matching the air volume supplied by the cooling plant to the server electrical loads holds good for all conventional data centre cooling applications, wherever they are located,’ says Fox. His company is currently deploying this control method in one of its legacy data centres, where it has historic energy consumption data. The site has a chiller-based system and a computer room air conditioning (CRAC) unit, free-cooling CRAC units and adiabatic coolers. The company expects this and other system upgrades to achieve savings of up to 10% in mechanical cooling power consumption.

The company has also started to look at the cost benefit of chilled water temperature adjustment, compared with chilled water volume control, with a view to developing a second-generation control strategy for centralised plant.